What is the best way to assess the quality of a health app?

What a fundamental question, and one raised by a growing number of clinicians, payers, and other stakeholders in the healthcare industry. This single topic has been at the heart of a rising number of scientific publications (7,929 since 2008, to be precise).

At Therappx, we’ve reviewed all the frameworks that organizations have used, or research groups worldwide have studied, to assess app quality since the ’90s. After this analysis, we came up with a bold statement:

None of the frameworks published since the ’90s has ever proven that it can identify patient-facing Digital Health Tools (DHT, such as apps and devices) that actually generate health outcomes or value (defined as “health outcomes achieved per dollar spent”).

Quality and value are two different things. Quality may be a predictor of value, but only if you are confident that an assessment framework effectively uncovers high-value DHT, which, to date, none has demonstrated.

Of course, high-quality DHT may translate into value more often. However, value depends more on the patient’s needs than on the quality of the tool (e.g., its number of features). Recommending high-quality DHT to every type of patient will therefore not necessarily translate into value.

Thus, advising on the reimbursement, prescription, or recommendation of DHT solely because they are of high quality (according to MARS, APPLICATION, or any other assessment framework) is suboptimal.

How to assess value?

This section explains how we achieve validation with Therappx’s complete, segmented framework and how it successfully uncovered the best-value DHT during the pandemic. In the next section, we discuss our observations about this framework in more detail.

To assess the value of a given DHT, we need to validate how its current version achieves outcomes for real patients in real-world settings. Because of this critical consideration, decisions regarding a DHT must be based on both quality and Real-World Evidence (RWE). Patients provide Real-World Data through connected devices (whose measurements are also called digital biomarkers) or clinically validated questionnaires (Patient-Reported Outcome Measures, or PROMs, and Patient-Reported Experience Measures, or PREMs). These data are then compared to determine whether a DHT effectively delivers outcomes; that comparison is the evidence.
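To make the comparison concrete, here is a minimal Python sketch that compares baseline and follow-up PROM scores for each DHT. The record layout, the `mean_improvement` helper, and all scores are hypothetical, and the sketch assumes a questionnaire where lower scores mean fewer symptoms. A real RWE analysis would also need a comparison group and appropriate statistical testing.

```python
from statistics import mean

# Hypothetical PROM records: (patient_id, dht_id, baseline_score, follow_up_score).
# Assumes a questionnaire where LOWER scores mean fewer symptoms.
records = [
    ("p1", "app_a", 18, 10),
    ("p2", "app_a", 15, 9),
    ("p3", "app_b", 16, 15),
    ("p4", "app_b", 20, 19),
]


def mean_improvement(records, dht_id):
    """Average drop in PROM score (baseline minus follow-up) among users of one DHT."""
    deltas = [base - follow for _, dht, base, follow in records if dht == dht_id]
    if not deltas:
        raise ValueError(f"no PROM data for {dht_id}")
    return mean(deltas)


for dht in ("app_a", "app_b"):
    print(dht, mean_improvement(records, dht))
```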

Combining quality and value assessments enables effective, robust decisions when identifying best-in-class DHT. To put such a framework into practice, we follow these steps:

- Step 1. Look for quality-related no-go criteria, meaning components of a DHT that may be harmful to patients (from a privacy, usability, or quality-of-content point of view, for example). For clarity, we refer to this step as the no-go framework in this article.

- Step 2. Let patients use the DHT that passed Step 1, and collect RWE in the process.

- Step 3. Identify mismatches between DHT and subpopulations of your patients, and remove DHT that did not perform well in any of your patient subpopulations. Keep only the ones that translate into value.

- Step 4. Track all substantial changes made to any of the DHT you assessed, and determine, based on the nature of the changes, whether you should repeat Steps 1 to 3.
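The four steps can be sketched as a small Python pipeline. This is an illustrative sketch under stated assumptions, not Therappx’s implementation: the `DHT` class, the helper names, the outcome scale, and the threshold are all hypothetical, Step 2 is represented only by the outcome data it produces, and Step 4 treats any version change as a substantial change.

```python
from dataclasses import dataclass, field


@dataclass
class DHT:
    """Hypothetical record for one digital health tool."""
    name: str
    version: str
    # Step 1 findings: privacy, usability or content red flags (empty means none found)
    no_go_flags: list = field(default_factory=list)
    # Step 2 result: mean outcome per patient subpopulation (higher is better, hypothetical scale)
    outcomes_by_subpop: dict = field(default_factory=dict)


def step1_no_go(dhts):
    """Step 1: keep only DHT with no no-go flags."""
    return [d for d in dhts if not d.no_go_flags]


def step3_keep_valuable(dhts, threshold):
    """Step 3: map each subpopulation to the DHT that reach the outcome threshold there.

    A DHT that reaches the threshold in no subpopulation appears nowhere, i.e. it is removed.
    """
    kept = {}
    for d in dhts:
        for subpop, outcome in d.outcomes_by_subpop.items():
            if outcome >= threshold:
                kept.setdefault(subpop, []).append(d.name)
    return kept


def step4_needs_reassessment(d, latest_version):
    """Step 4: flag a DHT for Steps 1 to 3 again (here, any new version counts as substantial)."""
    return d.version != latest_version


catalog = [
    DHT("app_a", "2.0", outcomes_by_subpop={"adults_18_64": 0.6, "adults_65_plus": 0.1}),
    DHT("app_b", "1.3", no_go_flags=["data hosted outside Canada"]),
    DHT("app_c", "4.1", outcomes_by_subpop={"adults_18_64": 0.2, "adults_65_plus": 0.5}),
    DHT("app_d", "1.0", outcomes_by_subpop={"adults_18_64": 0.1, "adults_65_plus": 0.2}),
]
passed = step1_no_go(catalog)
print(step3_keep_valuable(passed, threshold=0.4))
print(step4_needs_reassessment(catalog[0], latest_version="2.1"))
```

Here `app_b` is stopped by a no-go flag, `app_d` is dropped because it reaches the threshold in no subpopulation, and `app_a` and `app_c` are each kept only for the subpopulation where they perform well.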

This is how we have been assessing DHT at Therappx, and it is also what powers our flagship product, CORE. Through this PaaS, our clients can access, at any time, 58+ up-to-date data points on a large number of DHT available in Canada.

Over the last couple of years, adopting this methodology has led us to some interesting observations.

Key learnings on which we based our first product:

1. Being Evidence-informed + Intelligence-based is the way to go

At Therappx, we decided to make our no-go framework proprietary so that it accounts for Canada’s particularities (e.g., data hosting, localization, language, and units of measure). Moreover, we split our methodology into two stages, each mobilizing a different type of expertise, to make it efficient:

- A robot and a documentation expert perform the first assessment. Together, they gather a large quantity of data on all DHT available in Canada. The objective is to identify potential regulatory, data governance, or usability problems so that a DHT can be quickly removed from Therappx’s ecosystem based on non-clinical criteria.

- Then, trained clinicians perform a second assessment. Following a standardized assessment, they look at how well each remaining DHT aligns with, or could improve, their patients’ care. This step allows us to remove DHT based on clinical relevance and perceived therapeutic value, and to classify each DHT according to its level of therapeutic intervention, powering patient-centric decisions.
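A minimal Python sketch of this two-stage split follows. The non-clinical checks, the 1 to 5 relevance scale, the cut-off, and the intervention levels are hypothetical placeholders chosen for illustration; the text does not specify Therappx’s actual criteria or categories.

```python
# Stage 1 (robot + documentation expert): hypothetical non-clinical requirements.
NON_CLINICAL_CHECKS = {
    "data_hosted_in_canada": True,
    "available_in_english_and_french": True,
    "has_privacy_policy": True,
}

# Stage 2 (clinicians): hypothetical levels of therapeutic intervention.
INTERVENTION_LEVELS = ("information", "self-monitoring", "guided self-care", "therapy")


def stage1_passes(profile):
    """True only if every non-clinical requirement is met; missing data counts as a failure."""
    return all(profile.get(key) == required for key, required in NON_CLINICAL_CHECKS.items())


def stage2_classify(clinician_reviews, min_relevance=3):
    """Drop DHT below the relevance cut-off (1 to 5 scale) and group the rest by intervention level.

    clinician_reviews maps DHT name -> (relevance score, intervention level).
    """
    by_level = {level: [] for level in INTERVENTION_LEVELS}
    for name, (relevance, level) in clinician_reviews.items():
        if relevance >= min_relevance:
            by_level[level].append(name)
    return by_level


profiles = {
    "app_a": {"data_hosted_in_canada": True, "available_in_english_and_french": True, "has_privacy_policy": True},
    "app_b": {"data_hosted_in_canada": False, "available_in_english_and_french": True, "has_privacy_policy": True},
    "app_c": {"data_hosted_in_canada": True, "available_in_english_and_french": True, "has_privacy_policy": True},
}
shortlist = [name for name, p in profiles.items() if stage1_passes(p)]
# Clinicians only review the shortlist produced by Stage 1.
reviews = {"app_a": (4, "self-monitoring"), "app_c": (2, "information")}
print(shortlist)
print(stage2_classify(reviews))
```

Splitting the work this way keeps clinician time for the DHT that already cleared the cheaper, non-clinical screen.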

We applied this framework in the early days of the pandemic. Out of the 1,175 apps in both App Stores that had been adapted for COVID-related stress and anxiety, we retained 25, and we did so in less than ten days. Our team has since continued this work, adding apps for more than 13 conditions to our flagship product, CORE.

2. It is theoretically possible to predict value

Because many DHT share similarities (e.g., in UI and features), an organization can, over time, learn to predict value by evaluating quality alone (Step 1). However, this requires a clear idea of which DHT are valuable for your patient population and its characteristics (Step 3).

In other words, once you have acquired enough experience and data through the process, you could theoretically skip Steps 2 and 4. At that point, you would be reasonably certain that your DHT quality assessment methodology can uncover the subgroup of DHT that generates value for your patient population.
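One way to picture value prediction from quality alone is a nearest-neighbour estimate: a new DHT is assumed to deliver roughly the value that the most similar, already-assessed DHT delivered in the same subpopulation. The sketch below uses k-nearest neighbours purely as a stand-in technique; it is not the model Therappx and the NRC are testing, and the feature vectors and values are invented.

```python
import math

# Hypothetical data: quality feature vector (e.g. normalized UI, content and feature scores)
# and the value observed through RWE in ONE patient subpopulation, for already-assessed DHT.
known = {
    "app_a": ([0.9, 0.8, 0.7], 0.6),
    "app_b": ([0.2, 0.3, 0.4], 0.1),
    "app_c": ([0.8, 0.9, 0.6], 0.5),
    "app_d": ([0.3, 0.2, 0.3], 0.2),
}


def predict_value(features, known, k=2):
    """Estimate value as the mean observed value of the k most similar known DHT
    (similarity = Euclidean distance between quality feature vectors)."""
    if not known:
        raise ValueError("no assessed DHT to compare with")
    nearest = sorted(known.values(), key=lambda fv: math.dist(features, fv[0]))[:k]
    return sum(value for _, value in nearest) / len(nearest)


new_dht = [0.85, 0.85, 0.65]  # quality scores of a DHT that has no RWE yet
print(predict_value(new_dht, known))
```

The prediction is only as good as the RWE behind the known DHT, which is why Steps 2 and 4 can only be skipped once that evidence base is large.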

Therappx is currently testing the model described above with the help of the National Research Council of Canada (NRC). Our mutual goal is to create an RWE-fed prediction mechanism for DHT available on the Canadian market.

3. App Libraries and quality frameworks do only half of the job

Since the first certification program for apps was launched back in 2013 (and quickly suspended after problems were found in some of the apps it had certified), many organizations have launched their own curated selections of DHT (aka libraries). Most recently, pharmacy benefit managers (PBMs) such as CVS have entered this space to help their clients choose among pre-vetted DHT.

Some libraries focus on specific patient needs (PsyberGuide, GoodThinking, MindTools, etc.), while 40+ others include DHT across all health conditions.

Some of them borrowed a methodology described in peer-reviewed publications (e.g., VicHealth with MARS and ABACUS), while at least 48 others created their own framework.

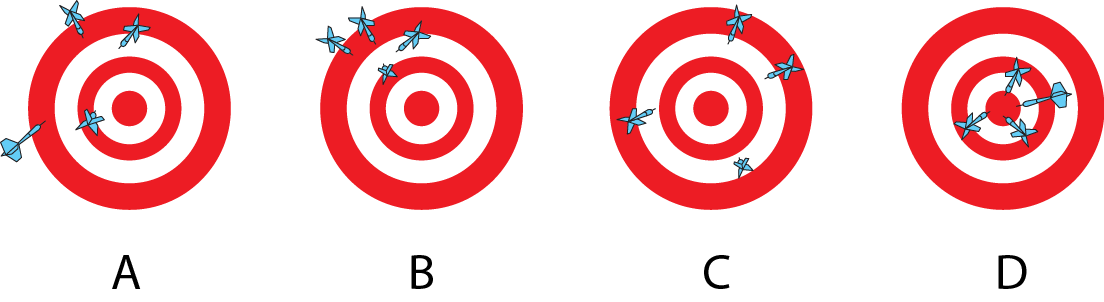

As stated previously, all these organizations use subjective criteria to assess quality without validating that these criteria translate into value, even those that use frameworks described in peer-reviewed publications. This is because, to find out whether a framework is reliable, researchers tend to look only at measures such as internal consistency, split-half reliability, test-retest reliability, or interrater reliability (ref. 1, 2, 3, 4, 5 and 6). These measures show whether a framework produces consistent scores, not whether those scores predict health outcomes.
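The gap between reliability and validity is easy to demonstrate. In the Python sketch below, two hypothetical reviewers agree perfectly (a Cohen’s kappa of 1.0), yet the apps they rate “high” show worse hypothetical outcomes than the apps they rate “low”. Cohen’s kappa is one standard interrater reliability statistic; the ratings and outcomes are invented for illustration.

```python
from collections import Counter


def cohens_kappa(rater1, rater2):
    """Cohen's kappa: agreement between two raters, corrected for chance agreement."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("raters must rate the same, non-empty set of items")
    n = len(rater1)
    observed = sum(a == b for a, b in zip(rater1, rater2)) / n
    c1, c2 = Counter(rater1), Counter(rater2)
    expected = sum(c1[cat] * c2[cat] for cat in set(c1) | set(c2)) / n**2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of six apps by two reviewers, and a hypothetical RWE outcome per app.
rater1 = ["high", "high", "high", "low", "low", "low"]
rater2 = ["high", "high", "high", "low", "low", "low"]
outcome = [0.1, 0.2, 0.1, 0.5, 0.4, 0.6]  # higher is better

high = [o for r, o in zip(rater1, outcome) if r == "high"]
low = [o for r, o in zip(rater1, outcome) if r == "low"]
print(cohens_kappa(rater1, rater2))                # perfectly reliable ratings...
print(sum(high) / len(high), sum(low) / len(low))  # ...that do not track outcomes
```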

We experienced this firsthand in the early days of Therappx with our group of 20+ DHT reviewers. Raters tend to suffer from central tendency bias: they give too many DHT average scores. Sure, the data they provided was consistent, but we were nowhere near finding DHT that generated real value. And it seems we were not alone. The information found in CORE is broad enough to ensure that decisions are not based solely on the judgment of a small group of reviewers.
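A rough way to spot central tendency bias is to check how many scores sit at the midpoint of the rating scale and how little they spread. The Python sketch below assumes a 1 to 5 scale and uses invented scores; it is a quick diagnostic, not a formal test.

```python
from statistics import pstdev


def central_tendency_report(ratings, scale_min=1, scale_max=5):
    """Share of ratings exactly at the scale midpoint, and the ratings' population SD."""
    midpoint = (scale_min + scale_max) / 2
    share_mid = sum(r == midpoint for r in ratings) / len(ratings)
    return share_mid, pstdev(ratings)


ratings = [3, 3, 4, 3, 3, 2, 3, 3, 3, 4]  # hypothetical reviewer scores for 10 DHT
share_mid, spread = central_tendency_report(ratings)
print(f"{share_mid:.0%} of DHT scored at the midpoint, SD = {spread:.2f}")
```

With these scores, seven of the ten DHT land on the midpoint: consistent, but of little help in telling DHT apart.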

4. Northwestern University researchers were (almost) right

During our systematic reviews of DHT assessments, we were struck by Northwestern University’s (NWU) concept of CEEBIT.

CEEBIT, which stands for Continuous Evaluation of Evolving Behavioral Intervention Technologies, is a framework organizations can use to leverage RWE generated by a group of DHT to rapidly eliminate DHT that “demonstrate inferior or poorer outcomes” in real-life settings.

In such a system, “[…] all [DHT] are maintained [in the ecosystem] until a [DHT] meets an a priori criterion for inferiority and is eliminated”.
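CEEBIT’s elimination logic can be sketched in a few lines of Python. The a priori inferiority criterion used here (a minimum number of patients plus a fixed margin behind the best performer’s mean outcome) is a simplified, hypothetical stand-in; the NWU authors’ actual statistical criteria are not reproduced.

```python
from statistics import mean


def ceebit_round(outcomes, margin=0.2, min_patients=30):
    """One population-wide elimination round in the spirit of CEEBIT.

    outcomes maps DHT name -> list of per-patient outcome scores (higher is better).
    Hypothetical a priori criterion: a DHT is eliminated once it has at least
    `min_patients` observations and its mean trails the best eligible mean by more
    than `margin`. Every other DHT is maintained. Returns the set of DHT kept.
    """
    eligible = {name: mean(vals) for name, vals in outcomes.items() if len(vals) >= min_patients}
    if not eligible:
        return set(outcomes)  # nobody has enough data yet: keep everything
    best = max(eligible.values())
    eliminated = {name for name, m in eligible.items() if best - m > margin}
    return set(outcomes) - eliminated


outcomes = {
    "app_a": [0.7] * 40,
    "app_b": [0.3] * 40,
    "app_c": [0.2] * 10,  # too few patients to be judged yet
}
print(sorted(ceebit_round(outcomes)))
```

`app_b` is eliminated because it trails `app_a` by more than the margin, while `app_c` stays in the ecosystem because it does not yet have enough patients to be judged.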

At Therappx, we found that, brilliant as this system is, it doesn’t account for the heterogeneity of the population into which a DHT is introduced. We think it is suboptimal to eliminate a DHT from the ecosystem before you are confident it has reached inferiority in all types of patients.

For example, a given DHT could appear inferior when you look at RWE generated by your population as a whole, yet be the best performer in a given subpopulation (e.g., men aged 65 and over). It may look inferior overall only because this subgroup of patients is underrepresented.

Instead, we think that a DHT should remain available to a given persona within your population until it meets an a priori criterion for inferiority for that persona.
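Applying the same kind of rule within each persona, rather than to the pooled population, can be sketched as follows. The data mirror the example above: `app_b` looks clearly inferior on pooled data because men aged 65 and over are underrepresented, yet it is the best performer for that persona. Persona labels, outcome values, margin, and minimum sample size are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def persona_rounds(records, margin=0.2, min_patients=5):
    """Apply a hypothetical inferiority rule separately within each persona.

    records: iterable of (dht, persona, outcome) tuples, higher outcome is better.
    Returns {persona: set of DHT still available to that persona}.
    """
    grouped = defaultdict(lambda: defaultdict(list))
    for dht, persona, outcome in records:
        grouped[persona][dht].append(outcome)
    available = {}
    for persona, by_dht in grouped.items():
        # Only DHT with enough patients in this persona can be judged inferior.
        eligible = {d: mean(v) for d, v in by_dht.items() if len(v) >= min_patients}
        best = max(eligible.values(), default=None)
        available[persona] = {
            d for d in by_dht
            if d not in eligible or best - eligible[d] <= margin
        }
    return available


# Men aged 65+ are underrepresented: 5 patients per app versus 50 for younger adults.
records = (
    [("app_a", "under_65", 0.8)] * 50 + [("app_b", "under_65", 0.3)] * 50
    + [("app_a", "men_65_plus", 0.2)] * 5 + [("app_b", "men_65_plus", 0.7)] * 5
)

pooled = defaultdict(list)
for dht, _, outcome in records:
    pooled[dht].append(outcome)
print({d: round(mean(v), 2) for d, v in pooled.items()})  # app_b looks inferior overall
print(persona_rounds(records))
```

With these numbers, the per-persona rule keeps `app_b` available to men aged 65 and over while removing it for younger patients, whereas a pooled rule with the same margin would have removed it for everyone.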

This may need to be performed by a machine, as the number of personas and variables can quickly become overwhelming. This methodology also stresses the need to detect dangerous DHT before they enter the system (with a no-go framework), since a DHT may take more time, and more patients, to reach inferiority and be removed.
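A quick count shows how fast personas multiply. Apart from the 13 conditions, which echo the figure mentioned earlier, the persona variables and levels below are hypothetical.

```python
from itertools import product
from math import prod

# Hypothetical persona variables; only the 13 conditions echo a figure from the text.
persona_variables = {
    "sex": ["female", "male"],
    "age_band": ["18-34", "35-49", "50-64", "65+"],
    "language": ["English", "French"],
    "condition": [f"condition_{i}" for i in range(1, 14)],
}

# Number of personas = product of the number of levels of each variable.
n_personas = prod(len(levels) for levels in persona_variables.values())
personas = list(product(*persona_variables.values()))
print(n_personas, len(personas))
```

This yields 208 personas. With, say, a hypothetical minimum of 30 patients per persona, a DHT would need 208 × 30 = 6,240 observations before it could be judged inferior in every persona, which illustrates why dangerous DHT must be caught up front.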

Are you interested in learning more about Therappx CORE? Send us an email!